AI Agent Memory: Short-Term, Long-Term & Structured

Synalinks Team

AI Agent Memory Architecture: Short-Term, Long-Term, and Structured Knowledge

Every AI agent has a memory problem. The language model at its core has no persistent state. Each request starts from scratch. Without an external memory system, your agent can't remember what happened five minutes ago, let alone reason over months of accumulated knowledge.

The agent framework ecosystem has responded with a variety of memory solutions: chat history buffers, vector stores, key-value caches, and more recently, knowledge graphs. But these aren't interchangeable. They solve different problems, and most production agents need more than one.

This guide breaks down the three layers of agent memory, what each one does, where the current tools fall short, and how to build an architecture that actually works at scale.

Layer 1: Short-term memory (conversation context)

Short-term memory keeps track of the current interaction. It's the reason your agent knows what "it" refers to when you ask a follow-up question.

How it works: The most common implementation is a chat history buffer. Each message in the conversation is stored and passed to the language model as context on every subsequent request. Some frameworks (LangChain, LangGraph) offer sliding-window buffers that keep only the last N messages, or summary buffers that condense older messages into a running summary.
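As a rough illustration of the sliding-window idea, here is a minimal sketch in plain Python. The class name `SlidingWindowBuffer` and the message format are assumptions made for this example; they are not the API of LangChain, LangGraph, or any other framework.

```python
from collections import deque

# Hypothetical message shape for this sketch: {"role": ..., "content": ...}
Message = dict


class SlidingWindowBuffer:
    """Keeps only the most recent `max_messages` chat messages."""

    def __init__(self, max_messages: int) -> None:
        # A deque with maxlen drops the oldest item automatically when full.
        self._messages = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def as_context(self) -> list:
        """Return the messages to send along with the next model request."""
        return list(self._messages)


buffer = SlidingWindowBuffer(max_messages=3)
buffer.add("user", "What is the status of order 1042?")
buffer.add("assistant", "Order 1042 shipped yesterday.")
buffer.add("user", "When will it arrive?")
buffer.add("assistant", "It should arrive on Friday.")

# The first user message has been evicted; only the last three remain.
context = buffer.as_context()
```

Note what the eviction costs: once the first message falls out of the window, the model no longer sees which order the conversation was about unless a later message repeats it.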

What it solves:

- Multi-turn conversations where context carries over

- Follow-up questions that reference earlier parts of the conversation

- Task continuity within a single session

Where it falls short:

- Memory is lost when the session ends

- Context window limits cap how much history you can include

- No mechanism for retaining important facts across sessions

- Summarization loses detail: the summary of a 20-message conversation doesn't preserve every data point discussed

Short-term memory is a solved problem for most use cases. If your agent only needs to handle single-session conversations, a chat history buffer is sufficient.

Layer 2: Long-term memory (persistent recall)

Long-term memory allows the agent to remember information across sessions. A customer success agent should remember that Client X prefers email communication and had an issue with billing last quarter, even if those facts came up weeks ago.

How it works: The typical approach is to store important facts in a vector database or key-value store. After each conversation, relevant information is extracted and embedded. On future requests, the system retrieves stored memories that are semantically similar to the current query.
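The store-then-retrieve loop can be sketched in a few lines. This is a toy under stated assumptions: `embed` uses word counts as a stand-in for a real embedding model, and `MemoryStore` is a hypothetical in-memory class standing in for a vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    """Hypothetical in-memory store; a real system would persist the vectors."""

    def __init__(self) -> None:
        self._items = []  # list of (fact, embedding) pairs

    def remember(self, fact: str) -> None:
        self._items.append((fact, embed(fact)))

    def recall(self, query: str, k: int = 2) -> list:
        """Return the k stored facts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [fact for fact, _ in ranked[:k]]


store = MemoryStore()
store.remember("Client X prefers email communication")
store.remember("Client X had a billing issue last quarter")
store.remember("Client Y asked about the enterprise plan")

top = store.recall("How does Client X prefer communication?", k=1)
```

The ranking depends only on similarity scores. Nothing in this loop knows which memory is newest or whether two memories disagree.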

What it solves:

- Cross-session continuity: the agent remembers past interactions

- User personalization: preferences and patterns are retained

- Accumulated context: the agent has more past knowledge to draw on as the store grows

Where it falls short:

- Retrieval is approximate. The system returns the memories whose embeddings are closest to the current query, which are not necessarily the most relevant or the most recent ones

- No structure. Memories are stored as flat text or embeddings. The system doesn't understand relationships between memories

- No reasoning. You can retrieve a memory, but you can't ask "which of my memories contradict each other?" or "what changed since the last time I saw this customer?"

- Staleness. Old memories don't automatically update when the underlying reality changes. The agent might remember a fact that's no longer true

- Scale degrades quality. As the memory store grows, retrieval becomes noisier. More memories mean more potential for irrelevant or contradictory results

Long-term memory is where most agent architectures start to struggle. The tools exist, but they treat memory as a retrieval problem. They answer "what do I remember that's similar to this?" when the agent actually needs to answer "what do I know that's relevant to this, and how does it connect to everything else I know?"

Layer 3: Structured knowledge (reasoning-ready memory)

Structured knowledge is the layer that most agent architectures are missing. It's the difference between an agent that recalls information and an agent that understands it.

How it works: Instead of storing memories as text or embeddings, knowledge is organized into entities, relationships, and rules:

- Entities are typed objects: Customer, Order, Product, Policy, with defined properties

- Relationships connect entities: Customer placed Order, Order contains Product, Product is manufactured by Supplier

- Rules encode domain logic: "at risk" = usage dropped 40% over 90 days AND has open critical tickets

This creates a knowledge graph that the agent can reason over, not just retrieve from. For a deeper comparison of retrieval vs reasoning, see our RAG vs Knowledge Graphs guide.
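To make entities, relationships, and rules concrete, here is a minimal sketch using Python dataclasses. The field names are hypothetical, and the rule reads "dropped 40%" as "dropped by at least 40%", which is an assumption on our part. This is not how Synalinks Memory represents knowledge internally.

```python
from dataclasses import dataclass, field


@dataclass
class Ticket:
    severity: str  # e.g. "critical" or "minor"
    is_open: bool


@dataclass
class Customer:
    name: str
    usage_90_days_ago: float
    usage_now: float
    # Relationship: Customer has Tickets.
    tickets: list = field(default_factory=list)


def usage_drop(customer: Customer) -> float:
    """Fractional drop in usage over the 90-day window (0.4 means 40%)."""
    if customer.usage_90_days_ago <= 0:
        return 0.0
    return (customer.usage_90_days_ago - customer.usage_now) / customer.usage_90_days_ago


def is_at_risk(customer: Customer) -> bool:
    """Rule (assumed reading): usage dropped at least 40% AND an open critical ticket exists."""
    has_open_critical = any(
        t.is_open and t.severity == "critical" for t in customer.tickets
    )
    return usage_drop(customer) >= 0.40 and has_open_critical


acme = Customer("Acme", usage_90_days_ago=1000, usage_now=550,
                tickets=[Ticket("critical", is_open=True)])
globex = Customer("Globex", usage_90_days_ago=1000, usage_now=550,
                  tickets=[Ticket("critical", is_open=False)])
```

Both customers show the same 45% usage drop, but only Acme has an open critical ticket, so only Acme satisfies the rule. The definition of "at risk" lives in one place and can be inspected, which is the point of encoding it as a rule rather than leaving it to the model.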

What it solves:

- Multi-hop reasoning: "Which customers of suppliers with delivery delays placed orders that are now overdue?" requires traversing multiple relationships. A knowledge graph handles this natively

- Temporal reasoning: "What changed in this customer's account since last quarter?" requires tracking state over time, not just retrieving the latest snapshot

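- Deterministic answers: The same query over the same knowledge graph produces the same result, because the answer is computed by traversing the graph and applying rules rather than generated by the model. No variation, no hallucination on structured facts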

- Traceability: Every answer comes with a reasoning chain showing which entities, relationships, and rules produced it

- Consistency: Knowledge is verified and contradictions are resolved at ingestion time, not at query time
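The multi-hop question above can be sketched as a traversal over a small set of triples. All data and names here are illustrative, and the query is read as "customers who placed an overdue order containing a product made by a delayed supplier", which is one possible interpretation. A real graph store would use its own query language instead of Python loops.

```python
# Facts as (subject, predicate, object) triples; all names are illustrative.
TRIPLES = {
    ("alice", "placed", "order_1"),
    ("bob", "placed", "order_2"),
    ("carol", "placed", "order_3"),
    ("order_1", "contains", "widget"),
    ("order_2", "contains", "gadget"),
    ("order_3", "contains", "widget"),
    ("widget", "manufactured_by", "supplier_a"),
    ("gadget", "manufactured_by", "supplier_b"),
}
DELAYED_SUPPLIERS = {"supplier_a"}
OVERDUE_ORDERS = {"order_1", "order_2"}


def objects(subject: str, predicate: str) -> set:
    """Follow one relationship from a subject to all of its objects."""
    return {o for s, p, o in TRIPLES if s == subject and p == predicate}


def customers_affected_by_delays() -> list:
    """Customer -> Order -> Product -> Supplier, filtered at both ends."""
    results = set()
    for customer, predicate, order in TRIPLES:
        # Hop 1: keep only customers whose order is overdue.
        if predicate != "placed" or order not in OVERDUE_ORDERS:
            continue
        # Hops 2 and 3: order -> product -> supplier.
        suppliers = {
            supplier
            for product in objects(order, "contains")
            for supplier in objects(product, "manufactured_by")
        }
        if suppliers & DELAYED_SUPPLIERS:
            results.add(customer)
    # Sorting makes the output order stable across runs.
    return sorted(results)


affected = customers_affected_by_delays()
```

Only alice qualifies: bob's overdue order comes from a supplier without delays, and carol's order is not overdue. Running the query again over the same triples gives the same answer, and the path through the triples doubles as the explanation.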

Where it falls short:

- Requires more upfront investment in modeling the domain

- Less suitable for purely unstructured, free-form information

- The ecosystem is smaller and newer compared to vector-based approaches

How the layers work together

A well-architected agent memory system uses all three layers, each handling what it does best:

Short-term memory provides the conversational context: what's the user asking, what did they say before, what's the current task?

Long-term memory provides recalled context from past interactions: what has this user discussed before, what are their preferences, what happened last time?

Structured knowledge provides the domain truth: what are the actual facts, how do entities relate to each other, what rules apply?

The reasoning happens at the structured knowledge layer. Short-term and long-term memory inform the query, but the answer is derived from verified knowledge through defined rules.
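One way to picture this division of labor is the sketch below. The dictionaries stand in for real stores, and every name and value is hypothetical. Short-term memory resolves what "it" refers to, long-term memory adds recalled context, and the answer itself comes only from the structured layer.

```python
from typing import Optional

# Layer 3: verified domain facts (hypothetical data).
KNOWLEDGE = {"Acme": {"at_risk": True, "open_critical_tickets": 2}}
# Layer 2: facts recalled from earlier sessions (hypothetical data).
LONG_TERM = {"Acme": ["prefers email communication"]}


def resolve_customer(history: list) -> Optional[str]:
    """Layer 1: find the most recently mentioned known customer."""
    for message in reversed(history):
        for name in sorted(KNOWLEDGE):
            if name.lower() in message.lower():
                return name
    return None


def answer_at_risk(history: list) -> dict:
    customer = resolve_customer(history)
    if customer is None:
        return {"answer": None, "reason": "no known customer in the conversation"}
    facts = KNOWLEDGE[customer]
    return {
        "customer": customer,
        "answer": facts["at_risk"],               # derived from layer 3 only
        "recalled": LONG_TERM.get(customer, []),  # layer 2 adds context, not the answer
    }


history = ["Tell me about Acme.", "Is it at risk?"]
result = answer_at_risk(history)
```

The recalled preference can shape how the agent phrases or delivers its reply, but it never changes the at-risk verdict.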

Choosing the right architecture for your agent

If your agent handles single-session conversations (a chatbot that answers questions from docs): short-term memory + vector RAG is sufficient.

If your agent needs to remember users across sessions (a personal assistant, a customer-facing bot): add long-term memory with a persistent store.

If your agent needs to reason over domain data and produce reliable, verifiable answers (a customer success agent, an analytics agent, a compliance system): you need structured knowledge. This is non-negotiable if wrong answers have business consequences.

Most teams start with layers 1 and 2 and hit a wall when they need layer 3. The symptoms: inconsistent answers, hallucinations on domain-specific questions, inability to explain how the agent reached a conclusion, and accuracy that degrades as the knowledge base grows.

How Synalinks Memory fits in

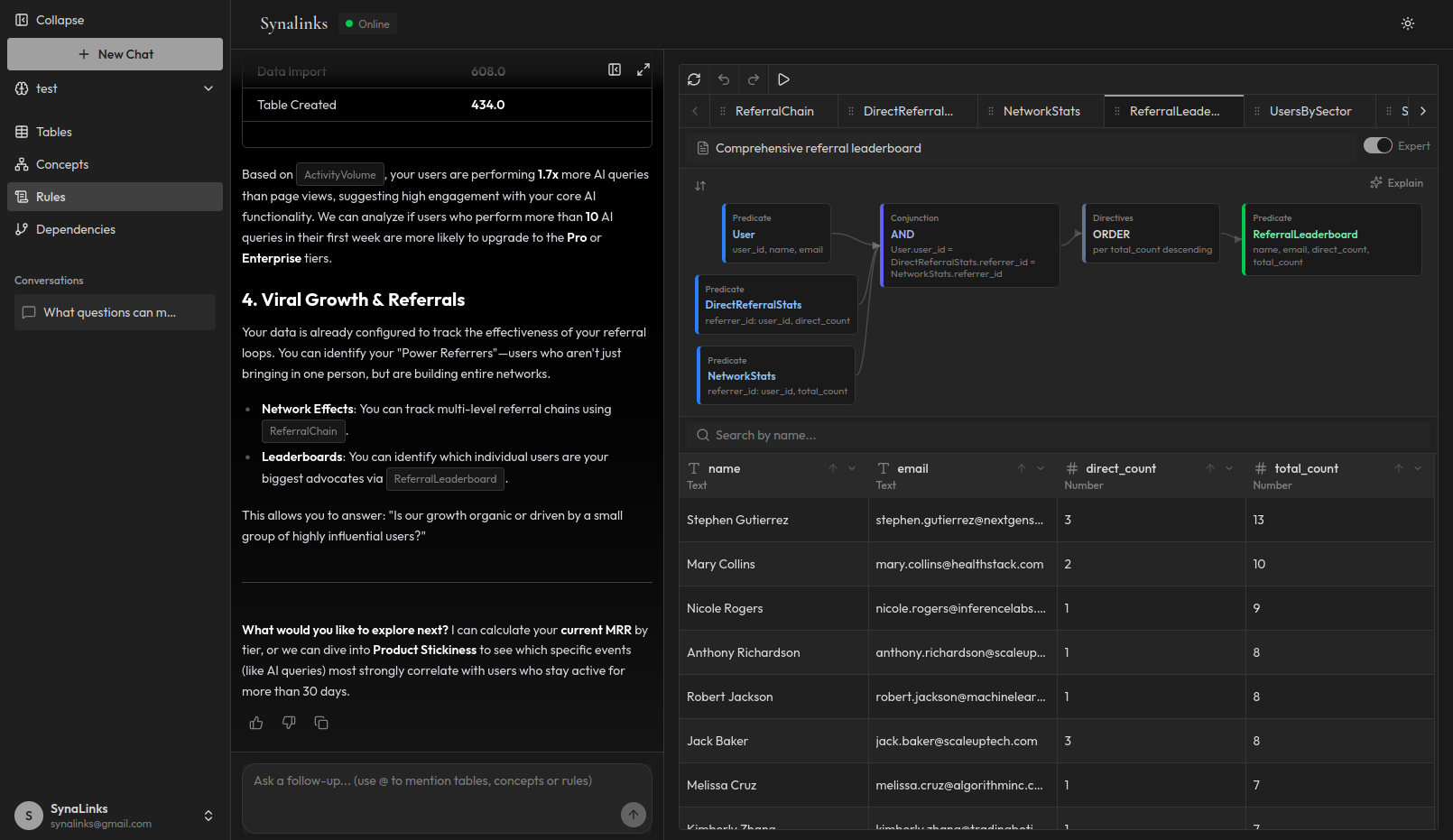

Synalinks Memory is a layer 3 solution: structured knowledge with deterministic reasoning, designed to integrate with whatever you're using for layers 1 and 2.

It takes your data sources (databases, spreadsheets, files, APIs), automatically structures them into entities, relationships, and rules, and provides a reasoning engine that your agent can query through a simple API.

You keep your existing chat framework for short-term memory. You keep your existing vector store for long-term recall if you need it. Synalinks Memory adds the structured knowledge layer that makes your agent's answers deterministic, traceable, and correct.

It's the architectural piece that turns an agent that remembers into an agent that knows.