Traceable AI: Make Your Agents EU AI Act Ready

Synalinks Team

Traceable AI: How to Make Your Agents Audit-Ready Before the EU AI Act Deadline

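Most of the EU AI Act's obligations, including the requirements for the high-risk use cases listed in Annex III, apply from 2 August 2026, with high-risk AI embedded in regulated products (such as medical devices) following in August 2027. For companies running AI agents in high-risk domains (healthcare, finance, HR, legal, critical infrastructure), this isn't a future concern. It's a deadline with teeth.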

The regulation requires that high-risk AI systems provide meaningful explanations of their decisions, maintain detailed logs for audit purposes, and allow human oversight of automated processes. Fines reach up to 35 million euros or 7% of global annual turnover, whichever is higher, for prohibited AI practices; breaching the obligations for high-risk systems can cost up to 15 million euros or 3%.

Most AI agents today can't meet these requirements. Not because the teams building them don't care about compliance, but because the underlying architecture makes traceability structurally impossible.

Here's what needs to change, and how to change it.

What the EU AI Act actually requires

The regulation is lengthy, but the requirements relevant to AI agents in production boil down to three pillars:

1. Explainability

Article 13 requires that high-risk AI systems be "designed and developed in such a way as to ensure that their operation is sufficiently transparent to enable deployers to interpret a system's output and use it appropriately."

In plain terms: when your AI agent produces an answer or makes a decision, you need to be able to explain how it got there. Not in vague terms ("it analyzed the data") but in specific, traceable terms ("it applied rule X to data point Y and derived conclusion Z").

2. Record-keeping

Article 12 mandates "automatic recording of events (logs) over the lifetime of the system." These logs must be detailed enough to allow for post-hoc auditing of the system's decisions.

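In plain terms: every decision your agent makes needs to be logged with enough detail that an auditor can reconstruct the reasoning chain months or years later. Deployers must keep these logs for at least six months (Article 26(6)), unless other EU or national law sets a different period.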

3. Human oversight

Article 14 requires that high-risk AI systems "can be effectively overseen by natural persons during the period in which they are in use."

In plain terms: humans need to be able to understand what the agent is doing, verify its outputs, and intervene when necessary. This requires transparency in the reasoning process, not just the final output.
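Human oversight is easier to audit when an agent's conclusion does not take effect on its own. The sketch below assumes a hypothetical `Decision` class (not from any library) that holds the agent's proposed conclusion, shows the reviewer the reasoning steps, and records who approved or overrode it and why. Article 14 itself lists the ability to disregard, override, or reverse a system's output among the oversight measures; this is one simple way to make that ability leave a trace.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Decision:
    """Hypothetical agent decision that only takes effect once a human resolves it."""
    decision_id: str
    proposed: bool                    # what the agent concluded
    reasoning: list                   # derivation steps shown to the reviewer
    status: str = "pending_review"    # pending_review -> approved | overridden
    final: Optional[bool] = None      # the value that actually takes effect
    events: list = field(default_factory=list)

    def approve(self, reviewer: str) -> None:
        self._resolve(reviewer, "approved", self.proposed, reason=None)

    def override(self, reviewer: str, value: bool, reason: str) -> None:
        self._resolve(reviewer, "overridden", value, reason)

    def _resolve(self, reviewer: str, status: str, value: bool, reason: Optional[str]) -> None:
        # A decision can be resolved only once; a later change must be a new decision.
        if self.status != "pending_review":
            raise ValueError(f"{self.decision_id} is already {self.status}")
        self.status, self.final = status, value
        self.events.append({
            "reviewer": reviewer,
            "action": status,
            "value": value,
            "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
        })


d = Decision(
    "dec-001",
    proposed=True,
    reasoning=["churn_risk_v3 applied to customer #4521",
               "usage_delta=-47%, open_critical_tickets=2 -> at_risk=true"],
)
d.override("analyst@example.com", value=False, reason="Critical tickets resolved by phone")
print(d.status, d.final)  # overridden False
```

Resolving a decision twice raises an error, so a changed judgment has to be recorded as a new decision rather than silently overwriting the old one.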

Why most AI agents fail these requirements

The standard AI agent architecture, a language model with retrieval-augmented generation (RAG), is opaque where it matters most:

The retrieval step is explainable. You can log which documents were retrieved and their similarity scores. This part isn't the problem.

The reasoning step is a black box. Once the retrieved context is sent to the language model, what happens inside is not inspectable. The model produces an output, but none of the intermediate reasoning (the decisions about what's relevant, the logic connecting evidence to conclusion) is captured in a structured, auditable form.

You can log the input (query + retrieved context) and the output (the response). But you can't log the reasoning that connected them. And that reasoning is exactly what the EU AI Act requires you to explain.

Some teams attempt to solve this with chain-of-thought prompting: asking the model to "show its work." But chain-of-thought outputs are themselves generated text. They're the model's description of reasoning, not a recording of reasoning. They can be fabricated, inconsistent, or incomplete. An auditor asking "prove this reasoning chain is accurate" would find nothing to verify it against.

What audit-ready AI looks like

An AI agent that meets the EU AI Act requirements needs three properties:

Deterministic reasoning

The same input must produce the same output, every time. If an auditor runs the same query against the same data, they must get the same answer. This rules out any architecture where the answer depends on model temperature, context window ordering, or stochastic generation.
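One way to make the determinism requirement testable is a replay check: run the same query against the same data several times and compare a hash of each (query, data, answer) triple. The sketch assumes a plain Python function `at_risk` with made-up thresholds as the reasoning step. A pipeline that samples from a language model at non-zero temperature could fail this check; a pure function passes it by construction.

```python
import hashlib
import json


def at_risk(customer: dict) -> bool:
    # Hypothetical thresholds for a churn rule, for illustration only.
    return customer["usage_delta"] <= -0.30 and customer["open_critical_tickets"] >= 1


def fingerprint(query: str, data: dict, answer: bool) -> str:
    # Canonical JSON (sorted keys, fixed separators) so identical inputs always hash identically.
    payload = json.dumps({"query": query, "data": data, "answer": answer},
                         sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


query = "Is customer 4521 at risk?"
customer = {"id": 4521, "usage_delta": -0.47, "open_critical_tickets": 2}

# Replay the same query against the same data several times and collect distinct fingerprints.
fingerprints = {fingerprint(query, customer, at_risk(customer)) for _ in range(10)}
print(len(fingerprints))  # 1
```

Storing the fingerprint next to each logged decision lets an auditor re-run it later; a mismatch means the data, the rule, or the reasoning step changed.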

Structured audit trails

Every decision must produce a log entry that contains:

- The query that was asked

- The data that was used (specific entities and values)

- The rules that were applied

- The step-by-step derivation from data to conclusion

- A timestamp and version of the knowledge base

This log must be machine-readable and structured, not a prose explanation generated by the model.
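The five fields above map naturally onto a typed record serialized as JSON. In this sketch, `AuditRecord`, its field names, and the `kb_version` label are assumptions for illustration; the point is that every field is structured data written by the system, not prose written by a model.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One structured log entry per decision (field names are illustrative)."""
    query: str                # the query that was asked
    data_used: dict           # entity key -> the exact values read
    rules_applied: list       # rule identifiers, including their version
    derivation: list          # ordered steps from data to conclusion
    conclusion: dict
    kb_version: str           # version of the knowledge base at decision time
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


record = AuditRecord(
    query="Is customer 4521 at risk?",
    data_used={"customer/4521": {"usage_delta": -0.47, "open_critical_tickets": 2}},
    rules_applied=["churn_risk_v3"],
    derivation=[
        "load customer/4521.usage_delta = -0.47",
        "load customer/4521.open_critical_tickets = 2",
        "apply churn_risk_v3 -> at_risk = true",
    ],
    conclusion={"at_risk": True},
    kb_version="kb-2026-02-14",  # hypothetical version label
)

print(json.dumps(asdict(record), indent=2))
```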

Verifiable reasoning chains

An auditor must be able to take a logged decision and verify it independently. This means the reasoning chain must reference specific, inspectable rules and specific, inspectable data points. "The model determined that the customer was at risk" is not verifiable. "Rule 'churn_risk_v3' was applied to customer entity #4521, evaluating usage_delta=-47% and open_critical_tickets=2, resulting in at_risk=true" is verifiable.
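Verification in this sense can be mechanical: the auditor looks up the logged rule, re-executes it on the logged inputs, and checks that it reproduces the logged output. The sketch assumes a hypothetical registry of rule functions and a made-up threshold pair for `churn_risk_v3` (a usage drop of 30% or more and at least one open critical ticket); only the rule name, the customer id, and the input values come from the example above.

```python
def churn_risk_v3(inputs: dict) -> bool:
    # Hypothetical thresholds; the real rule definition is not given in the text.
    return inputs["usage_delta"] <= -0.30 and inputs["open_critical_tickets"] >= 1


# Registry of versioned rules the auditor can inspect and re-execute.
RULES = {"churn_risk_v3": churn_risk_v3}


def verify(entry: dict) -> bool:
    """Re-run the logged rule on the logged inputs and compare with the logged output."""
    rule = RULES[entry["rule"]]  # an unknown rule id raises KeyError: nothing to verify against
    return rule(entry["inputs"]) == entry["output"]["at_risk"]


logged = {
    "rule": "churn_risk_v3",
    "entity": "customer/4521",
    "inputs": {"usage_delta": -0.47, "open_critical_tickets": 2},
    "output": {"at_risk": True},
}
tampered = {**logged, "inputs": {"usage_delta": -0.05, "open_critical_tickets": 0}}

print(verify(logged))    # True
print(verify(tampered))  # False: the logged output no longer follows from the logged inputs
```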

Building compliance into the architecture

The path to compliance isn't adding an audit layer on top of a black-box system. It's choosing an architecture where traceability is a built-in property.

Step 1: Structure your knowledge

Move from document chunks in a vector database to verified entities, relationships, and rules in a knowledge graph. Every piece of data the agent can access should be a typed, versioned, inspectable object.

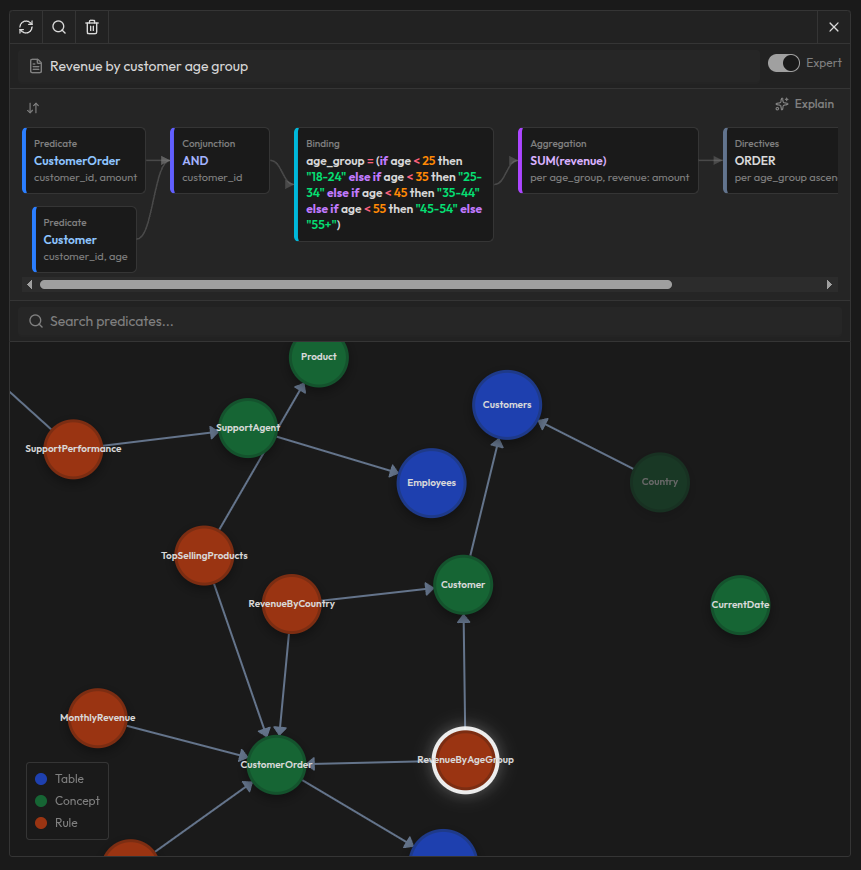
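A minimal sketch of what "typed, versioned, inspectable" can mean for a single node and edge, assuming an illustrative schema (the `Entity` and `Relationship` classes, attribute names, and the `has_ticket` relation are hypothetical and not a description of Synalinks Memory's data model). Objects are immutable, so an update creates a new version instead of overwriting the old one.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Entity:
    """A typed, versioned, immutable knowledge-graph node (illustrative schema)."""
    entity_type: str
    entity_id: str
    version: int
    attributes: tuple  # sorted (name, value) pairs; a tuple keeps the node immutable

    @property
    def key(self) -> str:
        return f"{self.entity_type}/{self.entity_id}"

    def get(self, name: str):
        return dict(self.attributes)[name]

    def updated(self, **changes) -> "Entity":
        # Never edit in place: each change produces a new version, so old ones stay inspectable.
        attrs = dict(self.attributes)
        attrs.update(changes)
        return replace(self, version=self.version + 1, attributes=tuple(sorted(attrs.items())))


@dataclass(frozen=True)
class Relationship:
    """A typed edge between two entity keys."""
    source: str
    relation: str
    target: str


v1 = Entity("customer", "4521", 1, (("open_critical_tickets", 0), ("usage_delta", -0.10)))
v2 = v1.updated(usage_delta=-0.47, open_critical_tickets=2)
edge = Relationship("customer/4521", "has_ticket", "ticket/981")

print(v1.get("usage_delta"), v2.get("usage_delta"), v2.version)  # -0.1 -0.47 2
```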

Step 2: Encode your logic as rules

Move business logic out of prompts and into first-class rule definitions. "At risk" isn't a concept the model interprets from context. It's a defined rule with explicit conditions, thresholds, and data dependencies.
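Here is one way to express a rule as data rather than as prompt text, assuming hypothetical `Rule` and `Condition` classes and made-up thresholds for `churn_risk_v3`. Because conditions, thresholds, and the attributes they read are explicit fields, the rule's data dependencies can be computed from its definition.

```python
import operator
from dataclasses import dataclass

# Comparison operators a rule may use, spelled out so rules stay plain data.
OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt, ">=": operator.ge, "==": operator.eq}


@dataclass(frozen=True)
class Condition:
    attribute: str
    op: str
    threshold: float

    def holds(self, facts: dict) -> bool:
        return OPS[self.op](facts[self.attribute], self.threshold)


@dataclass(frozen=True)
class Rule:
    name: str            # versioned identifier, e.g. "churn_risk_v3"
    concludes: str       # the fact this rule derives
    conditions: tuple    # all conditions must hold (logical AND)

    @property
    def depends_on(self) -> frozenset:
        # Data dependencies come from the definition itself, not from a prompt.
        return frozenset(c.attribute for c in self.conditions)


# Hypothetical thresholds for illustration only.
CHURN_RISK_V3 = Rule(
    name="churn_risk_v3",
    concludes="at_risk",
    conditions=(
        Condition("usage_delta", "<=", -0.30),
        Condition("open_critical_tickets", ">=", 1),
    ),
)

print(sorted(CHURN_RISK_V3.depends_on))  # ['open_critical_tickets', 'usage_delta']
```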

Step 3: Derive answers through rule application

When a question comes in, the system applies the defined rules to the structured knowledge and produces an answer through logical derivation. The reasoning chain is captured automatically at every step. No generation, no black box, no stochastic variation.
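The derivation step can be sketched as a small evaluator that applies a rule to an entity's facts and writes down each comparison as it goes. The rule format, thresholds, and step wording below are assumptions for illustration, and this sketch handles a single rule with AND-ed conditions; it does not chain rules together.

```python
import operator

OPS = {"<=": operator.le, ">=": operator.ge}

# Hypothetical rule as plain data: versioned name, concluded fact, AND-ed conditions.
RULE = {
    "name": "churn_risk_v3",
    "concludes": "at_risk",
    "conditions": [("usage_delta", "<=", -0.30), ("open_critical_tickets", ">=", 1)],
}


def apply_rule(rule: dict, entity_key: str, facts: dict):
    """Evaluate every condition and record each step, returning (result, reasoning_chain)."""
    chain = []
    result = True
    for attribute, op, threshold in rule["conditions"]:
        value = facts[attribute]
        ok = OPS[op](value, threshold)
        chain.append(f"{rule['name']}: {entity_key}.{attribute}={value} {op} {threshold} -> {ok}")
        result = result and ok
    chain.append(f"{rule['name']}: {entity_key}.{rule['concludes']}={result}")
    return result, chain


answer, chain = apply_rule(RULE, "customer/4521", {"usage_delta": -0.47, "open_critical_tickets": 2})
for step in chain:
    print(step)
```

The loop deliberately evaluates every condition rather than stopping at the first failure, so the logged chain shows all the evidence, including conditions that did not hold.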

Step 4: Log everything

Every query, every rule application, every data access, every derived conclusion goes into a structured audit log. This log is the artifact that demonstrates compliance to regulators.
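A minimal way to log everything is an append-only file with one JSON object per line (JSON Lines), written for each query, data access, rule application, and conclusion, and tied together by a query id. The `AuditLog` class, event names, and fields are assumptions for illustration; the sketch does not handle concurrent writers, rotation, or tamper protection.

```python
import json
import os
import tempfile
from datetime import datetime, timezone


class AuditLog:
    """Minimal append-only audit log: one JSON object per line (JSON Lines)."""

    def __init__(self, path: str):
        self.path = path

    def append(self, event_type: str, **payload) -> dict:
        entry = {"at": datetime.now(timezone.utc).isoformat(), "type": event_type, **payload}
        # Mode "a" only ever adds to the end of the file; earlier lines are never rewritten.
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(entry, sort_keys=True) + "\n")
        return entry

    def read(self) -> list:
        with open(self.path, encoding="utf-8") as f:
            return [json.loads(line) for line in f if line.strip()]


log = AuditLog(os.path.join(tempfile.mkdtemp(), "audit.jsonl"))
log.append("query", query_id="q-1", text="Is customer 4521 at risk?")
log.append("data_access", query_id="q-1", entity="customer/4521",
           fields=["usage_delta", "open_critical_tickets"])
log.append("rule_applied", query_id="q-1", rule="churn_risk_v3", result=True)
log.append("conclusion", query_id="q-1", answer={"at_risk": True}, kb_version="kb-2026-02-14")

for entry in log.read():
    print(entry["type"], entry["query_id"])
```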

The compliance advantage

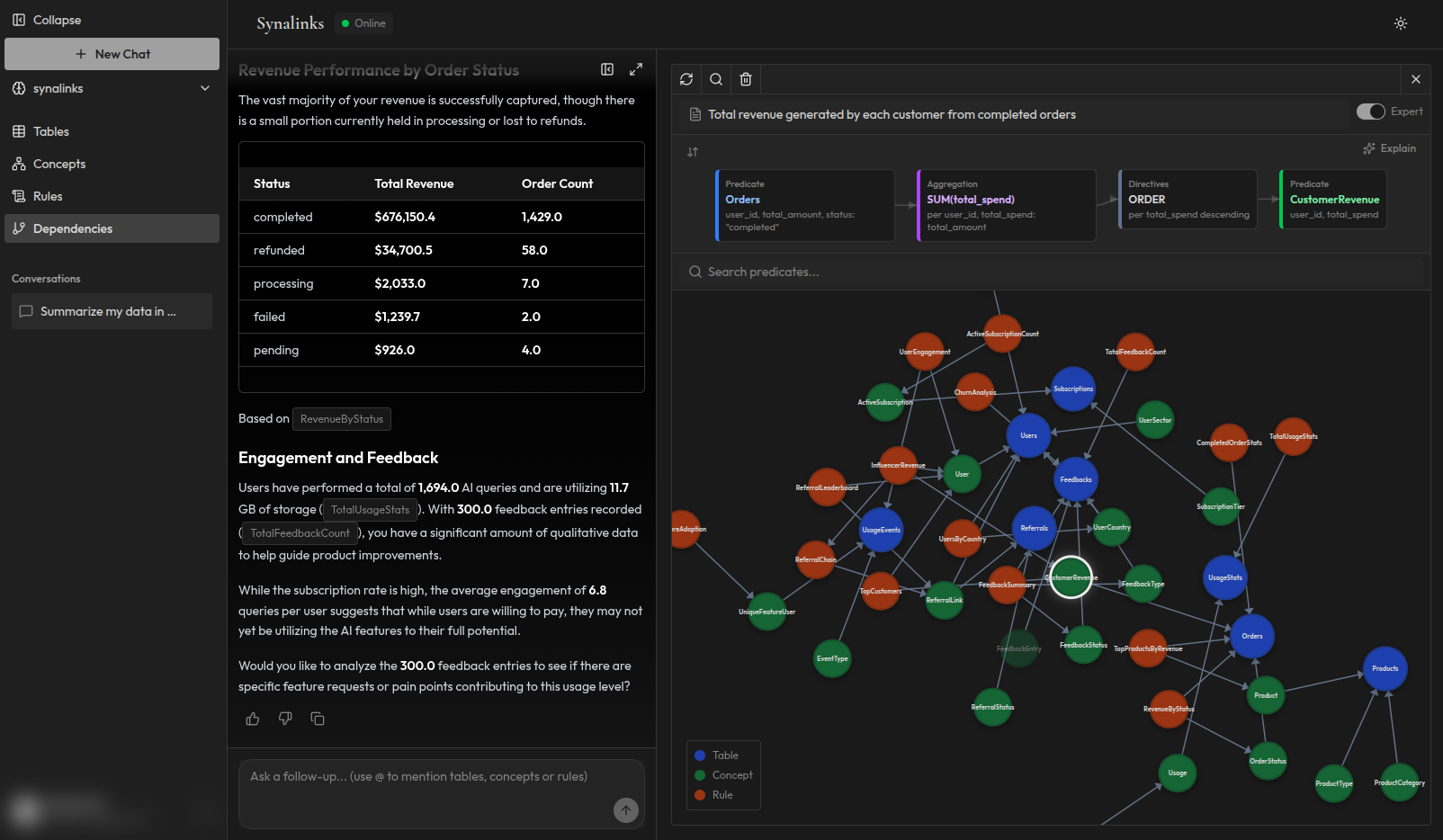

Teams that build traceability into their agent architecture don't just satisfy regulators. They get operational benefits that compound over time:

Faster debugging. When an agent produces an unexpected answer, the reasoning chain shows exactly where and why. No guesswork.

Stakeholder trust. When you can show a customer, a manager, or a board member exactly how the agent reached a conclusion, trust follows naturally.

Continuous improvement. When you can inspect every reasoning chain, you can identify patterns: which rules fire most often, which data points are most influential, where the knowledge graph has gaps.
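Once reasoning chains are structured, these questions become simple aggregations. The sketch counts, over a few hypothetical log entries, how often each rule fired, how often each attribute was read (a crude proxy for influence), and which attributes rules needed but could not find, which points to gaps in the knowledge graph. The entry format and the `missing` field are assumptions.

```python
from collections import Counter

# Hypothetical reasoning-log entries; in practice these would be read from the audit log.
entries = [
    {"rule": "churn_risk_v3", "fired": True, "inputs": ["usage_delta", "open_critical_tickets"]},
    {"rule": "churn_risk_v3", "fired": True, "inputs": ["usage_delta", "open_critical_tickets"]},
    {"rule": "upsell_ready_v1", "fired": False, "inputs": ["seat_utilization"]},
    {"rule": "renewal_due_v2", "fired": False, "inputs": [], "missing": ["contract_end"]},
]

fired = Counter(e["rule"] for e in entries if e["fired"])         # which rules fire most often
reads = Counter(a for e in entries for a in e["inputs"])          # which data points are used most
gaps = Counter(a for e in entries for a in e.get("missing", []))  # data rules needed but could not find

print(fired.most_common())  # [('churn_risk_v3', 2)]
print(gaps.most_common())   # [('contract_end', 1)]
```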

Portability. A structured, auditable reasoning system works the same way regardless of which language model you use for the presentation layer. You're not locked into a model provider's black box.

How Synalinks Memory enables compliance

Synalinks Memory was designed with traceability as a core property, not an add-on.

Structured knowledge: Your data is organized into verified entities, relationships, and rules. Every piece of knowledge the agent accesses is typed, versioned, and inspectable.

Deterministic reasoning: Every query is answered through rule-based derivation. Same question, same data, same answer. Always.

Complete audit trails: Every answer comes with a structured reasoning chain: which rules were applied, which data points were used, and how the conclusion was derived. These are machine-readable logs, not generated prose.

Temporal versioning: The knowledge graph tracks when things changed. An auditor can ask not just "what answer did the agent give?" but "what did the agent know at the time, and was the answer correct given that knowledge?"
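The question "what did the agent know at the time?" amounts to an as-of lookup over versioned facts. The sketch assumes each attribute keeps a sorted list of (valid_from, value) pairs; the history, names, and dates are made up, and this is not a description of Synalinks Memory's internals, just one simple way to answer the question.

```python
from bisect import bisect_right
from datetime import datetime

# Hypothetical attribute history: (valid_from, value) pairs, sorted by time.
HISTORY = {
    ("customer/4521", "open_critical_tickets"): [
        (datetime(2026, 1, 5), 0),
        (datetime(2026, 2, 10), 2),
        (datetime(2026, 3, 1), 0),
    ],
}


def as_of(entity: str, attribute: str, when: datetime):
    """Return the value the knowledge graph held at `when`, or None if nothing was known yet."""
    versions = HISTORY[(entity, attribute)]
    times = [t for t, _ in versions]
    i = bisect_right(times, when)  # number of versions that started at or before `when`
    return versions[i - 1][1] if i else None


print(as_of("customer/4521", "open_critical_tickets", datetime(2026, 2, 15)))  # 2
print(as_of("customer/4521", "open_critical_tickets", datetime(2026, 3, 5)))   # 0
```

An auditor reviewing a decision made on 15 February would replay the rule against the value 2, even though the tickets were closed by March, and could judge the answer correct given what was known then.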

If your agents operate in a domain covered by the EU AI Act, or if you simply want the operational benefits of traceable reasoning, Synalinks Memory gives you audit-ready AI out of the box.

If you'd like to discuss how Synalinks Memory maps to your specific compliance requirements, reach out to us. We're happy to walk through the details.